Lecture Notes

Economics of Sustainability

K Foster, CCNY, Spring 2014

Table of Contents

Commodities and Goods/Services

Basics of Supply and Demand Curves

Analyzing Supply and Demand Curves

Individual Demand to Market Demand

Intertemporal Choice and Discount Rates

Production Possibility Frontier (PPF)

Consumer Choice and Fees/Taxes

Appendix: A reminder about Percents and Growth Rates

Important Conditions for Competition

Rival and/or Excludable Goods versus Pure Public Goods

Sustainability and Sustainable Development

Sustainability and Economic Growth

Profit Maximization with One Input

Profit Maximization with Multiple Inputs

Cost Minimization/Profit Maximization

Hotelling on Resource Extraction

Prisoner's Dilemma and Cartels

Hicks-Marshall rules of Derived Demand

Supplementary Material for Advanced students

Choice of Dumping or Safe Disposal

When Costs & Benefits are Imperfectly Known (i.e. The Real World)

Extreme Case 1: Threshold effects of pollution

Extreme Case 2: Constant Marginal Damages

Irreversibility and Precautionary Principle

Actual Behavior of People making Choices under Uncertainty

Background on Global Climate Change

Financial Markets and Securities

Urban Policy in Response to Climate Change

Introduction

This class aims to teach at least three different things which are interrelated enough to make it sensible enough to jam into one class, but different enough to make it all complicated. These are:

1. Basic economics of sustainability and environment,

2. Basic business principles as applied to environmental enterprises,

3. Basic development concepts to understand the problem of global climate change.

The current version of these notes tries to cover part 1. Parts 2 and 3 will be later (??).

These notes are based on a number of different texts including Principles texts by Frank and Bernanke and by Mankiw, Intermediate text by Varian, finance text by Hull, environmental texts by Anderson, by Kolstad, and by Hanley, Shogren and White.

Basics

Although there are hard-core environmentalists who dispute it, economists generally believe that markets are the best way ever discovered by human ingenuity to allocate scarce resources efficiently and to ensure that resources are used most effectively. This is true for most resources but not all.

Markets work well for most resources, but this does not mean that government intervention is never justified. One of the objectives of this course is to figure out what institutional arrangements and structures allow markets to work, and which ones need to be improved. Where should government policy step in?

But a typical firm is run by managers who have a sharp incentive to cut costs: to limit the use of expensive inputs and to cut expenditures which do not directly impact customer satisfaction. Most consumers are looking for ways to cut their expenditures on items that do not bring adequate satisfaction.

So to begin, we will review basic economic theory about the allocation of scarce resources. In a perfect economy people don't need to understand all the implications of their consumption on different resources; they only need to know the price. The price is the sole sufficient indicator of scarcity. So much energy is expended by modern consumers trying to balance off different criteria, even for simple choices like a lightbulb. An incandescent bulb uses 'too much' energy relative to a fluorescent, but fluorescent bulbs usually contain mercury (hazardous disposal), other types of bulb might consume particular resources (rare earth metals) in being made. How ought consumers to trade off greater electricity usage versus mercury contamination? A consumer can be left swamped with information, trying to compare the incommensurable! But in a perfect economy consumers only need to look at the price. Clearly we don't live in a perfect economy.

But many resources are already included in the price of even the most quotidian consumption item. When we choose to buy an apple we needn't worry about whether the farmer has sufficient land or uses the proper fertilizer, or if the wholesaler has a good enough inventory-control system, or if the retailer uses scarce real estate optimally. We just choose whether or not to buy it. It's only when we try to trade off between organic apples or locally-grown apples or fair-trade apples or whatever that's difficult, because there's no single scoring system.

In a system of optimal economic competition, the price reveals relative scarcity. If supply is low relative to demand then the price will be high; if supply is great relative to demand then the price is low. Early economists often wrote about the apparent incongruity that water, necessary for life, was available for free while diamonds, not necessary for anything, were expensive. Why this apparent paradox? Because of their relative scarcity. (And thus marginal utility, but that's for later.)

Over a longer time period, firms will direct their Research & Development (R&D) budgets towards economizing on the items which are most scarce (i.e. have high prices), again simply because it's profitable for them to do so.

These market processes are the basis for extraordinary wealth. For much of human history a person needed to work all year just to get enough calories to fend off starvation. Nowadays the developed world worries most about obesity.

Markets are extraordinarily powerful. Recall that many countries experimented with central planning (called Communism) and that was a disaster. The best efforts by very smart people (motivated, at times, by fear for their lives) were not enough to supply even a fraction of the goods that could be provided by a market economy. Wise policy will use markets wherever possible. However markets are neither all-powerful nor omniscient. There will be cases where the simple assumptions underlying the Welfare Theorems are no longer valid, particularly where there are substantial amounts of goods with imperfect property rights (with externalities) and/or substantial transactions costs. Bob Solow, the Nobel-prize-winning economist, refers to the free-marketeers who see the doughnut while the interventionists see the hole (Solow 1974 AER).

(e.g. Brad Delong delong.typepad.com/sdj/2010/12/what-do-econ-1-students-need-to-remember-second-most-from-the-course.html)

Define Economics

"Economics is the study of choice in a world of scarcity" (from the intro text by Frank & Bernanke; yes, that Bernanke, who's just stepped down as Fed Chair)

- Some resources, which were once thought to be inexhaustible, are now known to be scarce; e.g. atmosphere (CO2 levels), clean air, fish in the sea

- Scarcity: No Free Lunch (TANSTAAFL): more of one thing means less of something else. This applies to buying groceries (more apples & fewer bananas) or choosing between car emissions & safety (lighter cars mean better MPG & lower emissions but are also less safe in an accident).

- Choice: people are free agents who take actions based on their own information and desires which do not necessarily match those of policymakers. Usually assume people are rational.

- Rational people think on the margins (Mankiw's intro text)

- Cost-Benefit Principle: it is rational to take action if and only if the extra benefits are as big as, or bigger than, the extra costs

- Economic Surplus = Extra Benefit − Extra Cost. So Cost-Benefit Principle can be restated as "Do actions with nonnegative Economic Surplus".

- Opportunity Cost: The Extra Cost is the value of the next-best alternative that must be given up to do something, so Cost-Benefit means take an action only if it has nonnegative Economic Surplus; that is, only if the extra Benefit is at least as large as the Opportunity Cost

- If prices reflect true scarcity of all goods then people take proper account, not because of any moral feeling but to maximize profit. This goes back to Adam Smith's propositions and observations.

- Environmental Economics is generally concerned with choices where the benefits and costs are shared even though the decision-making isn't necessarily

Here's a nice overview of Environmental Economics

Commodities and Goods/Services

· People buy and sell a multitude of different goods and services, many of them extremely specialized.

· Commodities are generalized goods, items that have been laboriously standardized in order to make them comparable.

· Commodities are created by people in particular situations (commoditization). For example, the cafeteria buys apples as commodities by the thousand but then these same apples are chosen as individual goods (people look for the ripest and least bruised fruit on display).

· Example: WTI Light Sweet Crude Oil (http://www.cmegroup.com/trading/energy/crude-oil/light-sweet-crude.html) is traded in units of 1000 barrels (each barrel is 42 gallons), delivered in Cushing, Oklahoma; "light" and "sweet" are carefully defined physical qualities. Many lawyers worked to write up the documents that define this commodity and specify how variations are recompensed. Some details are in Chapter 200 (!) of the basic NYMEX rulebook http://www.cmegroup.com/rulebook/NYMEX/2/200.pdf. Oil companies work hard to ensure that a particular quantity of oil meets these standards.

· An exchange might create a new commodity that doesn't exist, such as "Crack Spread," the difference of crude prices and the value of the refined products.

Basics of Supply and Demand Curves

· Demand Curve:

o For each person: shows the extra benefit gained from consuming one more unit

o by Cost-Benefit Principle, if the extra benefit from consuming one more unit is greater than the price, then consume; if not then don't

o so Individual Demand Curve shows how many are purchased at any given price

o Individual Demand Curves are combined to get a market demand curve of how many would be purchased by all the people in the market at a given price (horizontal sum)

o Depend on other factors than price (which shift the demand curve).

· Supply Curve: opportunity cost of producing certain quantity of output.

o If no fixed costs and no barriers to entry then firms produce at marginal cost

o Depend on other factors than price (which shift the supply curve).

· Behavior of Markets: markets are a wonderful institution; we analyze with some assumptions

o Depend on composition of good

o Depend on supply characteristics (how many firms, if there are fixed costs or other barriers to entry, rules & regulations and social norms)

o property rights are completely known, specified & enforceable

o all property rights are exclusive (no externalities)

o property rights are transferable

o items for sale have substitutes

o Commodities closely approximate these assumptions; other markets might be very far off (e.g. labor)

o What happens if demand is greater than supply? Vice versa?

· Equilibrium: price and quantity that have no tendency for change

· Some Common Mistakes

o Ignore Opportunity Costs

o Fail to Ignore Sunk Costs (since they're no longer on the margin)

o Fail to understand Average/Marginal Distinction

Jodie Beggs "Economists Do It With Models" on demand curves (follow youtube links for next lectures on supply; also Chapters 4, 5, 6 and 7 here, http://www.economistsdoitwithmodels.com/economics-classroom/)

Analyzing Supply and Demand Curves

· Consumer Surplus (CS)

You've surely had the experience: you go to a store to buy a particular item, ready to spend a certain amount of money. But surprise! You find it's on sale and you pay less than you expected. You've gotten Consumer Surplus. This did not come from the benevolence of the retailer (although they might try to convince you otherwise). This actually was a mistake by the retailer: they were targeting people whose choice could be influenced by the price reduction but accidentally got you too. You got a benefit from the fact that other people shop smart, with a keen eye on prices charged. You would have been willing to pay more, but because there's a market you paid less.

Take all of the people who would have been willing to pay more than the actual market price and add up how much they each benefited. This total amount is CS: the area under the demand curve and above the market price. Consumers were willing to pay more than the market price; their marginal benefit from consuming those goods was above the price they paid, so they gained from this market.

Examples: websites, from eBay to used-car listings, allow people to see the prices paid for other similar products. Compare with buying a used car without internet research: you must go to each dealer and haggle, and you don't know if a price is good or bad without substantial experience.

This could sound like an abstract concept, but ordinary people have an intuition of it. For example, people regularly pay a flat fee to join a "warehouse club" like Costco. They benefit from shopping at lower prices (i.e. they get consumer surplus) and are willing to pay for that benefit, as long as their payments are less than the benefits, of course.

· Producer Surplus (PS)

Producers also gain from a market. You are a producer and seller of your own labor. If you applied for a job and would have accepted a pretty low wage but you were surprised and the company offered you a better wage than you would have accepted then you got Producer Surplus. You benefit from the fact that there is a market with competitors trying to buy the product.

Find the difference between the lowest price that the producer would have accepted (supply curve) and the actual price received. Add these all up for PS: the area above the supply curve and below the price is Producer Surplus. Producers were willing to accept less than the market price; their opportunity cost was lower than their revenue so they gained from the market.

Examples: In a natural resource case, a dairy farmer might be willing to sell milk at even a very low price because the milk is tough to store and spoils quickly. But in a large market the milk can find a buyer at a decent price so the farmer gets PS. A mine where the ore is near the surface and easily accessible would sell the product even at a very low price. But the market offers a higher price because buyers compete for it, so the existence of the market provides a benefit to the producers.

· Pareto Improving Trade: a trade that makes both sides better off. If markets allow all Pareto-Improving trades then the market maximizes Total Surplus (= sum of Consumer Surplus plus Producer Surplus)

Example from Economist, "Economics Focus: Worth a Hill of Soyabeans," Jan 9, 2010 (on Blackboard and InYourClass.com).

· Deadweight Loss (DWL): a loss that is nobody's gain.

Example: Traffic to get over a bridge. Everybody pays a price of lost time and aggravation but this cost is nobody's gain. If everybody paid an equivalent price in money (as a toll) then this cost would be somebody's gain (the government, the public, and/or politicians' cronies).

This is one of the less widely-understood concepts; for example, take the voters' dislike of road pricing here in NYC.

· Price floor/ceiling effects: examples where Total Surplus is smaller & there is DWL; "Short side rules"
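The surplus ideas above can be made concrete with a small numerical sketch. Everything in it is an assumption chosen for illustration: a linear demand curve P = 100 - Q, a linear supply curve P = 20 + Q, and a price ceiling of 50. It also assumes that under the ceiling the available units go to the buyers who value them most, which is the most favorable case.

```python
# Hypothetical linear market (illustrative numbers only, not real data):
#   demand: P = a - b*Q   (extra benefit of the Q-th unit)
#   supply: P = c + d*Q   (opportunity cost of the Q-th unit)
a, b = 100.0, 1.0
c, d = 20.0, 1.0


def equilibrium():
    """Quantity and price where the demand and supply curves cross."""
    q = (a - c) / (b + d)
    return q, a - b * q


def total_surplus(q):
    """Area between the demand and supply curves for the first q units."""
    return (a - c) * q - 0.5 * (b + d) * q ** 2


q_star, p_star = equilibrium()
cs = 0.5 * (a - p_star) * q_star  # triangle under demand, above the price
ps = 0.5 * (p_star - c) * q_star  # triangle above supply, below the price

# A price ceiling below equilibrium: the short side (here, supply) rules.
ceiling = 50.0
q_ceiling = (ceiling - c) / d
dwl = total_surplus(q_star) - total_surplus(q_ceiling)

print(f"equilibrium: Q = {q_star}, P = {p_star}")
print(f"CS = {cs}, PS = {ps}, total = {cs + ps}")
print(f"with ceiling at {ceiling}: Q = {q_ceiling}, DWL = {dwl}")
```

The deadweight loss is the surplus on trades between the ceiling quantity and the equilibrium quantity that no longer happen; nobody collects it.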

· Effects of changes in demand or supply

· Private equilibrium leaves no unexploited opportunities for individuals (no-cash-on-the-table); but might leave opportunities for social action. (See Yoram Bauman, the Stand-up Economist, in AIR or on YouTube.)

· Elasticity allows easy characterization of how changes in demand or supply affect the market; it is the percent change in quantity divided by the percent change in price, $\frac{\%\Delta Q}{\%\Delta P}$.

· Elasticity works in both directions:

if amount supplied were to fall by 10%, what would happen to price?

if price rose by 5%, what would happen to the amount demanded?

Example of analysis by Jim Hamilton (EconBrowser Jan 15, 2012): what would be the effect of an embargo on Iranian oil shipments? If Iran is about 5% of the global market and elasticity is something like 1/4 to 1/6 or even 1/10, then this means a 5% drop of supply would produce a 20-30% or even (worst case) 50% increase in crude oil prices.
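The arithmetic behind the oil example is just the elasticity definition run backwards: the percent change in price is roughly the percent change in quantity divided by the elasticity. A minimal sketch, where the function name is made up for illustration and elasticity is taken as a positive magnitude:

```python
def price_change_pct(quantity_drop_pct, elasticity):
    """Approximate % price rise that goes with a given % drop in quantity.

    elasticity is the magnitude of (% change in quantity) / (% change in price).
    """
    return quantity_drop_pct / elasticity


for label, elasticity in [("1/4", 1 / 4), ("1/6", 1 / 6), ("1/10", 1 / 10)]:
    rise = price_change_pct(5, elasticity)
    print(f"elasticity {label}: a 5% supply drop means about a {rise:.0f}% price rise")
```

The less elastic the market (the smaller the number), the bigger the price swing needed to absorb the same lost supply.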

· Cross-Price Effects

Finally check the effects of a change in the price of one good on the consumption of the other good, so $\frac{\Delta x_1}{\Delta p_2}$. If this cross-price effect is positive then the goods are substitutes: an increase in the price of one leads consumers to buy more of the other instead (chicken vs beef). If the cross-price effect is negative then the goods are complements: an increase in the price of one leads consumers to cut back purchases of both items (hamburgers and rolls).

· Elasticity: when a price rises from p to p', so demand changes from x to x'

Linear elasticity is $\frac{(x' - x)/x}{(p' - p)/p}$ or $\frac{\Delta x / x}{\Delta p / p}$ or $\frac{\Delta x}{\Delta p} \cdot \frac{p}{x}$.

Point elasticity: As p' and p get closer and closer together (so that x' and x get closer as well), then the term $\frac{\Delta x}{\Delta p} \to \frac{dx}{dp}$, so that the elasticity formula can be written as $\frac{dx}{dp} \cdot \frac{p}{x}$ (and recall that x is a function of p). For a linear demand curve, note that elasticity is not constant. The slope of a line is constant, so $\frac{dx}{dp}$ is constant, but elasticity is this constant times $\frac{p}{x}$, which is the slope of a ray from the origin to the point under consideration.
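To see that elasticity changes along a straight-line demand curve, here is a short sketch using a made-up demand curve x(p) = 100 - 2p (the numbers are assumptions for illustration only). Demand elasticities come out negative because quantity falls when price rises; they are often quoted as magnitudes.

```python
# Hypothetical linear demand curve: x(p) = 100 - 2p (illustrative only).
SLOPE = -2.0  # dx/dp, the same at every point on a line


def demand(p):
    return 100.0 + SLOPE * p


def point_elasticity(p):
    """(dx/dp) * (p / x): the constant slope times the ratio p/x."""
    return SLOPE * p / demand(p)


def linear_elasticity(p, p_new):
    """((x' - x) / x) / ((p' - p) / p) for a discrete price change."""
    x, x_new = demand(p), demand(p_new)
    return ((x_new - x) / x) / ((p_new - p) / p)


for p in (10.0, 25.0, 40.0):
    print(f"p = {p}: x = {demand(p)}, point elasticity = {point_elasticity(p):.2f}")

print(f"linear elasticity from p = 10 to p = 11: {linear_elasticity(10.0, 11.0):.2f}")
```

At low prices demand is inelastic (magnitude below 1), at high prices it is elastic (magnitude above 1), even though the slope never changes.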

Example of supply curve: oil

Showing that as the price of oil increases, more becomes economical to produce.

From Saudi Aramco, http://www.world-petroleum.org/docs/docs/publications/2010yearbook/P64-69_Kokal-Al_Kaabi.pdf

Individual Demand to Market Demand

· horizontal sum

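At a quoted price, each person chooses to demand a certain quantity of the good (which might be zero). Suppose there are 3 people, A, B, and C, who enter the market at different prices: C only when the price is below P1, and A only when the price is below P2 (with P2 below P1).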

At a price above P1, only person B is in the market, so the market demand is just her demand. At a price lower than P1 but above P2, a reduction in price will prompt both B and C to demand the good. At a price lower than P2, all three people A, B, and C, are in the market. So a reduction in price induces all three to demand more. The market demand curve becomes more elastic since now a fall in price means ΔxA + ΔxB + ΔxC. The market elasticity arises both from intensive changes (each person's demand changes) and extensive changes (people enter or leave the market in response to price changes).
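The horizontal sum can be sketched in a few lines. The three demand curves below are invented for illustration: each person buys max(0, a - p) units, with choke prices chosen so that P1 = 6 (where C enters) and P2 = 4 (where A enters).

```python
# Hypothetical individual demands: person buys max(0, a - b*p) units.
# Choke prices (a/b) are assumptions picked so that P1 = 6 and P2 = 4.
people = {
    "A": (4.0, 1.0),   # in the market only when price is below P2 = 4
    "B": (10.0, 1.0),  # in the market at any price below 10
    "C": (6.0, 1.0),   # in the market only when price is below P1 = 6
}


def individual_demand(name, price):
    a, b = people[name]
    return max(0.0, a - b * price)


def market_demand(price):
    """Horizontal sum: add up the quantities everyone demands at this price."""
    return sum(individual_demand(name, price) for name in people)


for price in (8.0, 5.0, 3.0):
    buyers = [name for name in people if individual_demand(name, price) > 0]
    print(f"price {price}: buyers {buyers}, market demand {market_demand(price)}")
```

Each time another person enters, a one-unit price cut raises market quantity by more (1, then 2, then 3 units here), which is the extensive margin at work.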

Intertemporal Choice and Discount Rates

In general people value a sum of money paid in the future less than a sum of money paid now. This is represented by a "discount" factor: $100 in the future is worth $100*D now, where D<1.

The reason for this goes back to one of the most basic propositions of economics, opportunity cost. A thing's value is its opportunity cost, what must be sacrificed in order to get it. The opportunity cost of $100 in one year is not $100 now: I could put less than $100 in the bank, get paid some interest, and end up with $100 after one year. How much would I have to put in now? If I put $Z into the bank then after a year I would have $Z(1+r), where r is the rate of interest. Set this equal to $100 and find that Z=100/(1+r).
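As a quick check of Z = 100/(1+r), here is a sketch with an assumed interest rate of 5% (the rate and the function name are illustrative choices, not from the text):

```python
def present_value(future_amount, r, years=1):
    """Amount to deposit now, at annual rate r, to have future_amount later."""
    return future_amount / (1 + r) ** years


r = 0.05  # assumed rate of interest, for illustration
z = present_value(100, r)
discount_factor = z / 100  # the D in "$100*D"

print(f"deposit ${z:.2f} now to have $100 in one year (D = {discount_factor:.4f})")
print(f"check: ${z * (1 + r):.2f}")
```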

A common misconception is that this is about inflation. It's not! A world with perfect zero inflation could still have positive interest rates, so money in the future would be worth less than money now. Economists distinguish between the real rate of interest and the nominal rate of interest; the real rate of interest is (approximately) the nominal rate minus the inflation rate. For example, if your money grew by 8%, but inflation made each dollar 5% less valuable, then the real rate of interest would be just 3%. (Interestingly, this works in reverse just as well: a country with deflation, where currency can buy more, could have a real rate of interest above the nominal rate.) We'll usually focus on the real rate here, net of inflation.

Why is the interest rate at the level that it is? We can accept the logic of opportunity cost, given above, but still ask why the interest rate is set at some level. Over history it has been level for long stretches of time; the prevalence of anti-usury laws and religious prohibitions would imply that questions about the proper level of interest rates have been common. Part of the answer is that people are impatient: we all want more now! Children are extremely impatient (most hear "wait" and "no" as synonyms); maturity brings (a little bit) more patience. Then there is the demand from entrepreneurs, people who have a good idea and need capital. On the supply side there are many people who want to smooth their consumption over their lifetime: save when they have a high income so that they can retire.

The logic of opportunity cost holds just as much for government policy as for individual choice. A government trades off money now versus money in the future. What is the appropriate rate that they should use? Should the government act like an individual? But it lives longer than any individual; does that matter?

Of course people make all sorts of crazy decisions and there are a variety of psychological experiments that show this. For instance, offered a choice about being paid, subjects were asked to choose either to get $10 tomorrow or $12 in a week; alternately they were offered $10 in one week or $12 in two weeks. The choices should be the same but systematically aren't. People are willing to wait if the waiting is postponed. (Males who are shown porn subsequently act with a much higher discount rate; females don't seem to be so simple-minded.)

This calculation to figure discount rates is straightforward for time horizons for which we observe prices: there are very popular markets for financial securities such as Treasury bonds offering payments of money as far as 30 or even 50 years into the future. But how do we discount money farther into the future, perhaps at some point beyond the lifetime of anyone currently alive?

A few factors might be considered relevant. First, we might consider that in the future there will likely be more people: the world's population keeps increasing (although most projections show that it will eventually level off at something like 10 or 11 billion). But if there are more people around to share the burden, then a dollar, when the population is twice its current level, should be worth around half of a dollar today. Second, economic growth (partly through the steady accumulation of technology) will mean that future generations will be richer than current generations, so again a dollar to a rich person (in the future) could reasonably be considered to be worth less than a dollar today (to the relatively poorer).

Finally the impatience of the current population must be taken into account, although this calculation is fraught. On one hand, we want to model the way people make decisions, and it is surely true that people are impatient. But is this a form of discrimination against the unborn? Nordhaus gives a convincing argument about taking account of the actual preferences of actual people; Stern argues from a lofty perspective about what the discount ought to be, based on ethical values. There is no single easy answer.

The broad question is whether policymakers ought to discount in this way. Is it ethical for a society to take on expensive debts? (Again, many governments do. However this is irrelevant to deontology.) This question is large and multi-faceted; a paragraph cannot do justice to either side of the argument. To make the problem most pointed: some government spending can save lives so a discount rate, applied to government spending choices, means that government is willing to save fewer than 100 lives today, in return for sacrificing 100 lives in the future. These sorts of questions have dogged philosophers for ages and we've mostly abandoned any hopes of coming up with a solution that could be broadly agreed upon. (Ethical questions are often put in railroad terms: you control a switch that can change the track upon which a runaway locomotive will roll; would you switch from killing 2 people to killing one person? What if the act of controlling the switch involved murdering someone? This is how philosophers while away the hours.) But the lack of clear moral guidance about the single right choice does not allow us to postpone these decisions.

Government policy chose to build transportation infrastructure in NYC such as airports and highways, which increase current well-being, at the expense of poverty-reduction or poverty-alleviation in the past. Was that right? Is it better, if the government has $1bn to spend, to vaccinate children or build bridges or abate CO2 emissions?

In all of this, we note that governments must make choices to spend more money now even if it means spending less money later. We attempt to describe this trade-off with discount rates. A higher interest rate means that future outcomes receive less weight; you can think of it as a "hurdle rate" for public projects. If the future is discounted at 4%, fewer projects will clear the hurdle than if the rate is 2% or 1%.
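The "hurdle rate" idea can be sketched with a made-up public project: it costs 100 today and returns 4 per year for the next 40 years. These cash flows are assumptions chosen only to show how the verdict flips as the discount rate rises.

```python
def npv(cash_flows, rate):
    """Net present value; cash_flows[t] is the net payment in year t (0 = today)."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical project: pay 100 now, receive 4 per year for 40 years.
project = [-100.0] + [4.0] * 40

for rate in (0.01, 0.02, 0.04):
    value = npv(project, rate)
    verdict = "clears the hurdle" if value > 0 else "does not clear the hurdle"
    print(f"discount rate {rate:.0%}: NPV = {value:7.2f}, {verdict}")
```

With these numbers the project is worth doing at 1% or 2% but not at 4%, even though nothing about the project itself has changed.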

Terminology: a "basis point" is one-hundredth of a percentage point. So if the Fed cut rates by one half of one percentage point (say, from 4.25% to 3.75%) then this is a cut of 50 basis points (bp, sometimes pronounced "bip") from 425 bp to 375 bp. Ordinary folks with, say, $1000 in their savings accounts don't see much of a change (50 bp less means $5) but if you're a major institution with $100m at short rates then that can get into serious money: $500,000.

Rate of Compounding

Sometimes we use continuously-compounded interest, so that an amount invested at a fixed interest rate grows exponentially. Unless you've read the really fine print at the bottom of some loan document, you probably haven't given much thought to the differences between the various sorts of compounding: annual, semi-annual, etc. Do that now:

| If $1 is invested and grows at rate R then | after one year I'll have |
|---|---|
| annual compounding | (1 + R) |
| semi-annual compounding | (1 + R/2)^2 |
| compounding 3 times | (1 + R/3)^3 |
| compounding m times | (1 + R/m)^m |
| continuous compounding (i.e. letting m go to infinity) | e^R |

This odd irrational transcendental number, e, was first used by John Napier and William Oughtred in the early 1600's; Jacob Bernoulli derived it; Euler popularized it. It is $\lim_{n \to \infty} \left(1 + \frac{1}{n}\right)^n$ or $\sum_{k=0}^{\infty} \frac{1}{k!}$. It is the expected minimum number of uniform [0,1] draws needed to sum to more than 1. The area under $\frac{1}{x}$ from 1 to e is equal to 1.

Sometimes we write $e^R$; sometimes exp{R} if the stuff buried in the superscript is important enough to get the full font size.

Since interest was being paid in financial markets long before the mathematicians figured out natural logarithms (and computing power is so recent), many financial transactions are still made in convoluted ways.

For an interest rate of 5%, this quick Excel calculation shows how the discount factors change as the number of periods per year (m) goes to infinity:

| m per year | (1+R/m)^m | Discount Factor |
|---|---|---|
| 1 | 1.05 | 0.952380952 |
| 2 | 1.050625 | 0.951814396 |
| 4 | 1.0509453 | 0.951524275 |
| 12 | 1.0511619 | 0.951328242 |
| 250 | 1.0512658 | 0.95123418 |
| 360 | 1.0512674 | 0.951232727 |
| Infinite | 1.0512711 | 0.951229425 |

So going from 12 intervals (months) per year to 250 intervals (business days) makes a difference of one basis point; from 250 to an infinite number (continuous discounting) differs by less than a tenth of a bp.
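The same calculation can be done outside of Excel. The sketch below recomputes the growth factors and discount factors for R = 5% using only the formulas (1+R/m)^m and e^R from the text; the variable and function names are just illustrative.

```python
import math

R = 0.05  # annual interest rate used in the table


def growth_factor(m):
    """Value after one year of $1 compounded m times at annual rate R."""
    return (1 + R / m) ** m


rows = [(m, growth_factor(m)) for m in (1, 2, 4, 12, 250, 360)]
rows.append(("Infinite", math.exp(R)))  # continuous compounding

print(f"{'m per year':>10}  {'(1+R/m)^m':>10}  {'Discount Factor':>15}")
for m, g in rows:
    print(f"{m!s:>10}  {g:>10.7f}  {1 / g:>15.9f}")

# Differences in basis points (1 bp = 0.0001)
monthly_to_daily_bp = (growth_factor(250) - growth_factor(12)) * 1e4
daily_to_continuous_bp = (math.exp(R) - growth_factor(250)) * 1e4
print(f"12 -> 250 periods: {monthly_to_daily_bp:.2f} bp")
print(f"250 -> continuous: {daily_to_continuous_bp:.3f} bp")
```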

This assumes interest rates are constant going forward; this is of course never true. The yield curve gives the different rates available for investing money for a given length of time. Usually investing for a longer time offers a higher interest rate (sacrifice liquidity for yield). Sometimes short-term rates are above long-term rates; this is an "inverted" yield curve. Nevertheless for many problems assuming a constant interest rate is not unreasonable.

Do people behave quite in the way that this assumes? In some senses, yes: they generally value future benefits less than current benefits. However they do not do this uniformly: there is generally a conflict between how impatient people actually are, versus how impatient they want to be.

Discounting over generations gets more complicated since we can no longer appeal to individual decisions as a guide. Some people argue a link to social valuation across current incomes. Arguing that current generations ought to sacrifice for the good of future generations (for example by mitigating climate change) is a statement that the poor (people living today) ought to make sacrifices for the rich (people in the future). We can observe policy choices about the relative interests of poor and rich people now; for example social payments such as welfare and unemployment payments can be viewed as insurance paid by rich to help the poor. We observe different societies making different choices about this tradeoff.

Can read Tyler Cowen's article in Chicago Law Review (online).

On using these Lecture Notes: We sometimes don't realize the real reason why our good habits work. In the case of taking notes during lecture, this is probably the case. You're not taking notes in order to have some information later. If you took your day's notes, ripped them into shreds, and threw them away, you would still learn the material much better than if you hadn't taken notes. The process of listening, asking "what are the important things said?," answering this, then writing out the answer in your own words: that's what's important! So even though I give out lecture notes, don't stop taking notes during class. Take notes on podcasts and video lectures, too. Notes are not just a way to capture the fleeting sounds of the knowledge that the instructor said, before the information vanishes. Instead they are a way for your brain to process the information in a more thorough and more profound way. So keep on taking notes, even if it seems ridiculous. The reason for note-taking is to take in the material, put it into your own words, and output it. That's learning.

Production Possibility Frontier (PPF)

In analyzing choices we distinguish between what is possible and what is desirable; an optimal choice balances these two considerations. To analyze what is feasible or possible we sketch a Production Possibility Frontier.

The Production Possibility Frontier (PPF) represents the combinations of two goods which can possibly be attained. (The PPF shows the maximum; certainly less of both is possible!)

For example, politicians debate the tradeoff between cheap oil/gas (Drill Baby Drill!) and a clean environment. We can represent this tradeoff as a PPF, with a clean environment on the vertical axis and cheap gas on the horizontal axis.

This shows that a society could have a completely clean pristine environment with zero cheap gas (where the PPF intersects the vertical axis). Or an utterly dirty environment and ultra-cheap gas (where the PPF intersects the horizontal axis). We would never want to be interior to the PPF, since this would mean that society could have more of both without any sacrifice. It is a frontier because anything beyond it is infeasible; anything within it is inefficient. Changing technology would allow the PPF to move outward so that society could have more of both.

The opportunity cost is proportional to the slope of the PPF. The slope changes depending on how much drilling or environment we already have. If we already have a very clean environment with a low level of cheap gas (at a point near the upper left of the PPF), then getting even cleaner (moving up and left) requires a huge reduction in cheap gas to get only a small improvement in clean environment: the opportunity cost of the last bits of environment is huge. Oppositely, if we have a lot of cheap gas but little clean environment (we're on the lower right), then cleaning up some means a small sacrifice of cheap gas (a low opportunity cost). People can have different preferences about what sacrifice is reasonable and so where on the PPF the society ought to be.

From the PPF we can immediately define the opportunity cost: how much does a completely unspoiled landscape "cost"? The value of the gas which must be foregone. How much does gas "cost"? The value of the habitat spoiled. If choices must be made between the two priorities then every step toward one priority means some diminution of progress to the other priority.
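To make the changing slope concrete, here is a minimal numerical sketch. The quarter-circle shape of the frontier, the radius of 10, and the units are all assumptions chosen only for illustration; nothing in the argument depends on this particular curve.

```python
import math

# Hypothetical PPF: a quarter circle, clean**2 + gas**2 = FRONTIER_RADIUS**2.
# The functional form and the units are assumptions for illustration only.
FRONTIER_RADIUS = 10.0


def max_gas(clean):
    """Most cheap gas attainable for a given level of clean environment."""
    return math.sqrt(FRONTIER_RADIUS**2 - clean**2)


def opportunity_cost_of_clean(clean, step=1.0):
    """Gas given up to move from `clean` to `clean + step` along the PPF."""
    return max_gas(clean) - max_gas(clean + step)


# The cleaner the environment already is, the more gas the next unit costs.
for clean in (1.0, 5.0, 9.0):
    cost = opportunity_cost_of_clean(clean)
    print(f"clean={clean:.0f}: one more unit of clean costs {cost:.2f} units of gas")
```

Running it shows the opportunity cost of one more unit of clean environment rising as we move toward the upper left of the frontier.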

Many examples: a lake can be used for recreation or reservoir of water supply; rainforest can be used for biodiversity or crops; land can be mined or left open; coast used for wind farm or beautiful scenery; etc. Application to Global Climate Change.

Indifference Curves

We analyze the choice of an individual balancing two desired outcomes. There are some cases where both outcomes are easily achieved; here economics has little to add. There are other cases where there is a trade-off, where progress toward one goal must mean that the other goal becomes farther off. These cases are more difficult.

Consider the choices of people who like forests for recreational use (including habitat preservation) as well as for a source of logs (supporting the local economy). We will shorten these two outcomes as "animals" and "logs".

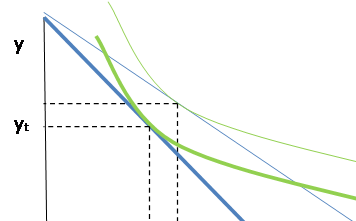

Start from a particular point, where there is some amount of both logging and preservation, so point A:

Assuming the person likes both logging and preservation of habitat, any combination (such as B) that gave more of both would be preferred; any combination (such as C) that gave less of both would be less preferred (the dotted vertical and horizontal lines through A mark the current amounts of logs and animals).

Preferences get complicated when we ask how a person would trade off one good for another. What increment more wildlife habitat (more animals) would balance slightly less logging? Call this point D. What increment more logging would balance slightly less habitat? Call this point E.

Connect together these points into a smooth curve, which we call an "indifference curve" because the person is indifferent between the various options.

One person's preferences might look like this:

which implies that this person likes both logs and animals. Indifference curves above are preferred; indifference curves below are less preferred.

Different people might have different preferences. This person likes animals and cares very little about logs:

While this person cares about logging jobs and not much at all for animals or habitat:

Horizontal or vertical curves would represent complete lack of caring for a particular outcome. This might accurately represent the views of some people on the extremes.

Why do we usually sketch the indifference curves as bowed? This is again an assumption about behavior on the margin. Return to an individual with preferences that are not too extreme,

From a point in the middle, such as point F, the person might make an almost equal tradeoff: a 1% diminution of habitat for a 1% increase in logging (for instance). However as the person moved upwards and leftwards (toward G), they might want a greater compensation of logging increase for equal diminutions of animal habitat. If there is a giant park then people might be willing to allow logging in a few areas, but as the size of the wilderness shrinks, they become less willing to give up the remaining bits. Oppositely as the choices move from F toward H: more and more habitat is protected and so becomes less valued. This is the principle of diminishing marginal utility. (Diminishing marginal utility is the idea that, when I'm thirsty, that beer tastes great; when I've already had a few, I don't get quite as much enjoyment from one more beer.)
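A small sketch can show why the curve is bowed. It assumes a particular utility function, U = (animals * logs) ** 0.5, purely for illustration; the notes do not commit to any functional form, and the numbers are hypothetical.

```python
def logs_on_curve(animals, utility_level):
    """Logs needed to reach `utility_level` when U = (animals * logs) ** 0.5.

    The utility function is an assumption made only for this illustration.
    """
    return utility_level**2 / animals


def compensation_for_losing_one_animal(animals, utility_level):
    """Extra logs that keep the person on the same indifference curve
    after giving up one unit of animals."""
    return logs_on_curve(animals - 1, utility_level) - logs_on_curve(animals, utility_level)


# Along one indifference curve (U = 10), the scarcer animals already are,
# the more logs the person demands in exchange for giving up one more unit.
for animals in (20, 10, 3):
    extra_logs = compensation_for_losing_one_animal(animals, utility_level=10.0)
    print(f"animals={animals}: needs {extra_logs:.2f} more logs to give up one unit")
```

The required compensation grows as habitat shrinks, which is exactly the bowed shape sketched above.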

Note on Aggregating Preferences: although we derived a market demand curve from individual demand curves above, aggregating indifference curves is not so easy (in fact it's generally impossible!). Aggregating PPFs is simple, though.

Optimal Choice

Make the (not entirely serious) assumption that we have some units to measure "animals" and "logs". Starting from a value of zero logs and all animals, suppose we reduced the number of animal units by one: how many more logs could we get? This gives the opportunity cost of the last unit of animals.

But compare this high cost with the cost (in log units) of reducing the amount of animals, if the amount of animals is already small:

Somehow the society must figure a way to bring these two considerations of production possibilities and choice into equilibrium, to find the tangent of PPF and indifference curve:

A rational maximizing individual who does all of the production by him or herself, and knows his or her own indifference curves, would make this choice. In a world where production and consumption are separated, each side sees only the price,

So producers see only the relative price of animals to logs (a to l) but choose optimally; consumers see the same relative price and also consume optimally.
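The tangency condition can be checked numerically. The sketch below assumes a quarter-circle PPF and the utility function U = animals * logs; both are hypothetical choices made only so that there is something to compute. It searches along the frontier for the best point and then compares the two slopes there.

```python
import math

R = 10.0  # hypothetical PPF: animals**2 + logs**2 = R**2


def logs_on_ppf(animals):
    """Most logs attainable for a given amount of animals."""
    return math.sqrt(R**2 - animals**2)


def utility(animals, logs):
    """Hypothetical preferences, assumed only for this illustration."""
    return animals * logs


# Search along the frontier for the point that gives the highest utility.
grid = [i * R / 10000 for i in range(1, 10000)]
best_animals = max(grid, key=lambda a: utility(a, logs_on_ppf(a)))
best_logs = logs_on_ppf(best_animals)

# Absolute slopes at the chosen point (logs per unit of animals):
mrt = best_animals / best_logs  # slope of the PPF
mrs = best_logs / best_animals  # slope of the indifference curve

print(f"optimum: animals={best_animals:.3f}, logs={best_logs:.3f}")
print(f"slope of PPF={mrt:.4f}, slope of indifference curve={mrs:.4f}")
```

At the optimum the two slopes agree (up to the grid resolution), and that common slope is the relative price that decentralized producers and consumers would each respond to.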

Consumer Choice and Fees/Taxes

There may be cases where policymakers are reluctant to impose fees for worry about the distributional impacts. For example, water pricing may lead to more efficient outcomes but this could lead to the poorest people suddenly facing a steep price hike for a necessary good. A gas tax, carbon emissions permits, and other programs all have this feature.

The simple way to fix this is to rebate the tax revenue to each person (but regardless of how much was purchased). It might seem that this would undo the effect entirely, but with some basic micro we can show that although the increased income will stimulate spending on the good, nevertheless the price rise will diminish spending (this is the Slutsky decomposition of substitution and income effects).

Consider a typical consumer who chooses between good X and good Y (where Y is a composite of all other goods). Assume the price of Y is $1 and the price of X is P. The consumer has income of M. Then her budget constraint looks like this:

[Figure: budget constraint, with the chosen quantity X* marked on the X axis]

And assume she chooses the point, (X*,Y*) as indicated.

Now a tax of T on good X would result in a rise in the price of X to (P + T) and shift her budget set inward, getting her to a lower utility level:

Now suppose that some of the revenue from this tax were rebated, to raise the person's income from M to M' to make the old X*,Y* just affordable.

Then the person is no worse off but still is using less of the good x through only the substitution effect not the income effect.
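The tax-and-rebate argument can be traced with numbers. This sketch assumes Cobb-Douglas preferences, U = X**0.25 * Y**0.75, and the values M = 100, P = 2, T = 1; all of these are hypothetical and chosen only to make the three budget sets easy to compare.

```python
def cobb_douglas_demand(income, price_x, share_x=0.25):
    """Optimal (X, Y) for U = X**share_x * Y**(1 - share_x), price of Y = 1.

    The Cobb-Douglas form and the share are assumptions for illustration.
    """
    x = share_x * income / price_x
    y = (1 - share_x) * income
    return x, y


def utility(x, y, share_x=0.25):
    return x**share_x * y ** (1 - share_x)


M, P, T = 100.0, 2.0, 1.0  # hypothetical income, price of X, and tax on X

x0, y0 = cobb_douglas_demand(M, P)            # original choice (X*, Y*)
x1, y1 = cobb_douglas_demand(M, P + T)        # tax, no rebate
M_prime = (P + T) * x0 + y0                   # income that just affords (X*, Y*) at the taxed price
x2, y2 = cobb_douglas_demand(M_prime, P + T)  # tax plus rebate

print(f"no tax:        X={x0:.3f}, U={utility(x0, y0):.3f}")
print(f"tax only:      X={x1:.3f}, U={utility(x1, y1):.3f}")
print(f"tax + rebate:  X={x2:.3f}, U={utility(x2, y2):.3f} (M'={M_prime:.1f})")
```

With the rebate the consumer still buys less X than before the tax, yet is no worse off than at the original choice.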

Further refinements could adjust the marginal prices so that, for example, the first few units are available at a low cost while remaining units are more costly. This would provide people with a minimum level of the good without substantial deleterious effects on efficiency.


Appendix: A reminder about Percents and Growth Rates

A percent is just a convenient way of writing a decimal. So 15% is really the number 0.15, 99% is 0.99, and 150% is 1.50. When you remove the "%" sign you have to move the decimal point two digits to the left. This can be particularly confusing with single-digit numbers where the decimal point is at the end and therefore omitted: 5% represents the number 0.05 and 1% represents 0.01. If there is already a decimal point then it moves two places: 0.5% is therefore the number 0.005. In Macroeconomics this can get confusing since US inflation data is commonly reported as, for example, "0.2%" last month. This means that typical prices increased by 0.002.

If A is half the size of B then we can say that A is 50% of B. If it were a quarter of the size, it would be 25%. If a number is increasing then there are many ways of expressing this. Sometimes we say that Z is 125% as large as Y; this is the same as saying that Z is Y plus a 25% increase. You can see this from the decimals: 125% = 1.25 = 1 + 0.25, so it is equal to one plus 25%.

This can also get confusing when finding percentages of percentages. Many stores try to fool people with this: they offer "50% off and then take another 25% off additionally!" Does this mean that you get 75% off the regular price? No! Think for a minute: if they offered "50% off and then take another 50% off additionally," would that mean that they were giving it away for free? No, they're taking half off and then another half off so you get it for a quarter of the original price (since ½ * ½ = ¼ or 0.5 * 0.5 = 0.25). So offering "50% off and then take another 25% off additionally!" means you get 0.50 off and then another 0.50 * 0.25 = 0.125 off, so you pay 0.50 - 0.125 = 0.375, which is 37.5% of the original price (a total discount of 62.5%, not 75%).

For instance, we might want to find 10% of 10%. We CANNOT just multiply 10*10, get 100, and leave that as the answer!
Rather we first convert them to decimals and then multiply: so 0.10 * 0.10 = 0.01 = 1%. So if I want to know, for instance:

- 4 is what percent of 25? I'd divide 4/25 = 0.16 so 16%.

- If some country has GDP of $125 bn and invests $33 bn, what is its investment rate? 33/125 = 0.264 so 26.4%.

- A state had 47.3m jobs; employment grew at 2% so how many jobs does it have now? 47.3(1 + 0.02) = 48.2m jobs.

You can see from the examples that one of the other good things about percentages is that we don't have to worry about units. If the top and bottom are both expressed in the same units then the percentage is unit-less.

In economics the data are commonly used to try to persuade you to think one thing or another. Therefore, even if someone's not just outright lying, they're often telling you about the data in a way that persuades you one way or another. Whether it's stores and companies or politicians, they're trying to play with the data so you've got to be careful not to get played.

Here's another example. Would you rather invest your money in a bank account that paid 12% in interest each year; or one that paid 1% each month of the year? If you don't care about the difference then you're losing money. Take, for example, if you had $1000 to invest. If you invested it in the first account you'd end up with 1000(1 + 0.12) = $1120, which is an increase of $120 in the year. But if you got 1% each month, then after the first month you'd have 1000(1 + 0.01) = $1010. This amount would be reinvested in the second month so you'd have 1010(1 + 0.01) = $1020.10. Note that since 1010 = 1000(1 + 0.01), we could re-write 1020.10 = 1000(1 + 0.01)(1 + 0.01) = 1000(1 + 0.01)^2. So after three months you'd have 1000(1 + 0.01)^3, after four months you'd have 1000(1 + 0.01)^4, after five months you'd have 1000(1 + 0.01)^5, and, well, I hope you can see a pattern. So after 12 months you'd have 1000(1 + 0.01)^12, which is equal to $1126.83.
So you'd have $6.83 more after 12 months, if you invested $1000, which is 0.6% more. Sure, maybe $6 isn't a lot of money, but if you were working for a major financial institution with $10m, then that's $68,300 in a year. A person could live on that. It should be clear that you've got to be careful about percentages and growth rates.

Generalizing to Formulas

Suppose you've got some amount of money, $Z, to invest. Suppose that the money grows at a rate of r per time period (usually a year). So if at first you've got $Z then after a year you'd have $Z plus $Z*r, so in total you'd have Z + Zr = Z(1 + r). If you re-invested this money for an additional year (two years in total) then you'd have Z(1 + r)(1 + r) = Z(1 + r)^2. After three years, Z(1 + r)^3. If you put it in for T years and keep on re-investing then you'd end up with Z(1 + r)^T. This is compound interest.

Compound interest is often referred to as one of the most important fundamental concepts in business. This is because of the way that a small initial amount of money can grow and grow, if left to compound over a long time period. For example if you're planning to retire in 40 years you might want to start saving now. Why start now? Because, if you can save just $1000 this year, then after 40 years you'd have over $45,000 if it grew at 10%.

We often might want to solve backwards: if I end up with some amount of money after a given time period, and I know how much I started from, what was the rate of growth? For instance, if I end up with $45,000 after $1000 grows at compound interest for 40 years, what is the rate of growth? [Ten percent.]

To solve these sorts of financial problems, you want logarithms. (Trust me, I know: you're asking, why could anyone possibly want logarithms?! Keep reading.) It makes the math simpler. In fact business applications like these were the main reason why logarithms were developed. If you remember your algebra, you know that figuring just (1 + r)^2 is a bit complicated since you have to multiply it out to get 1^2 + 2r + r^2. Doing (1 + r)^3 is longer and (1 + r)^12 is just masochism. But log[(1 + r)^12] = 12*log(1 + r). So solving backwards is not too hard if you take logs of both sides [I'm talking about natural logs, ln( ), not base-10].

In many business and economic applications, the time period is something other than years. It could be months or quarters or days or just overnight. (Did you know that there's a huge market for lending money just overnight? Why? Because night here in NYC is day in [...].)

Although we developed the formula for the growth in a bank account, it can be applied to any variable that is growing at some rate. That could be inflation or GDP or just about any other variable that changes over time. Suppose I've got some time series of observations of a variable, X. So I label these as X_t, where the t tells me what time unit. It might be the year, in which case I might have X_2002, X_2003, X_2004, ... The absolute difference is ΔX, which is given by ΔX_t = X_t - X_{t-1}. The percent change is %ΔX = (X_t - X_{t-1}) / X_{t-1}.

Suppose that we have information that a company's sales increased from $50m to $60m in a single year. What is the growth rate? The change in sales is $60m - $50m = $10m, while the initial level is $50m so the percent growth rate is ($10m/$50m) = 0.20, or a 20% growth rate. This rate has no units: sales happened to be given in units of millions of dollars, but the 20% growth rate would be unchanged if the sales had been in billions or thousands; dollars or pesos or euro; tons or hours or cubic yards.

If you know calculus then you can read on; if not then come back once you've been enlightened. A final note: since I mentioned logarithms, I'll mention their relationship to calculus and to percent growth, since so many students miss it: the derivative of the log of x is the percent change in x. Or using the notation of %ΔX to represent the percentage change in X, then d ln(X)/dt = (dX/dt)/X ≈ %ΔX.
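The arithmetic in this appendix is easy to check by machine. The helper functions below are hypothetical names written for these notes; they simply restate the formulas from the text: stacked discounts, compound growth, solving backwards for the rate with logs, and the percent change.

```python
import math


def stacked_discount_price(*discounts):
    """Fraction of the original price paid after applying discounts in sequence."""
    fraction = 1.0
    for d in discounts:
        fraction *= 1.0 - d
    return fraction


def future_value(principal, rate, periods):
    """Compound growth: principal * (1 + rate) ** periods."""
    return principal * (1.0 + rate) ** periods


def implied_rate(start, end, periods):
    """Solve end = start * (1 + r) ** periods for r by taking natural logs."""
    return math.exp(math.log(end / start) / periods) - 1.0


def percent_change(previous, current):
    """(X_t - X_{t-1}) / X_{t-1}, returned as a decimal."""
    return (current - previous) / previous


print(stacked_discount_price(0.50, 0.25))    # 50% off, then another 25% off
print(future_value(1000, 0.12, 1))           # 12% once a year
print(future_value(1000, 0.01, 12))          # 1% each month, reinvested
retirement = future_value(1000, 0.10, 40)    # $1000 at 10% for 40 years
print(retirement, implied_rate(1000, retirement, 40))
print(percent_change(50, 60))                # sales from $50m to $60m
```

Taking logs turns the exponent into a multiplication, which is why `implied_rate` needs only one `log` and one `exp` instead of multiplying out (1 + r)^40.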


Important Conditions for Competition

Depend on Secure and Complete Property Rights

- property rights are completely specified

- all property rights are exclusive (no externalities)

- property rights are transferable and enforceable

In considering these necessities, recall the Theory of the Second Best (due to Lipsey and Lancaster): a system of property rights that satisfies most (but not all) of the conditions is not necessarily better than a system satisfying fewer conditions. Counting up the satisfied assumptions does not measure how near the outcomes are.

Markets

Microeconomic theory proves the First Welfare Theorem, which guarantees that a competitive market economy (with complete property rights and no transactions costs) is Pareto efficient, meaning that we can't make any person happier without impairing someone else. This is a big reason why economists believe that markets are generally the best way to distribute resources.

In a perfect economy people don't need to understand all the implications of their consumption on different resources; they only need to know the price. The price is the sole sufficient indicator of scarcity. So much energy is expended by modern consumers trying to balance off different criteria, even for simple choices like a lightbulb. An incandescent bulb uses 'too much' energy relative to a fluorescent, but fluorescent bulbs usually contain mercury (hazardous disposal), other types of bulb might consume particular resources (rare earth metals) in being made. How ought consumers to trade off greater electricity usage versus mercury contamination? A consumer can be left swamped with information! But in a perfect economy consumers only need to look at the price. Clearly we don't live in a perfect economy.

But many resources are already included in the price of even the most quotidian consumption item. When we choose to buy an apple we needn't worry about whether the farmer has sufficient land or uses the proper fertilizer, or if the wholesaler has a good enough inventory-control system, or if the retailer uses scarce real estate optimally. We just choose whether or not to buy it. It's only when we try to trade off between organic apples or locally-grown apples or fair-trade apples or whatever that's difficult, because there's no single scoring system.

In a system of optimal economic competition, the price reveals relative scarcity. If supply is low relative to demand then the price will be high; if supply is great relative to demand then the price is low. Early economists often wrote about the apparent incongruity that water, necessary for life, was available for free while diamonds, not necessary for anything, were expensive. Why this apparent paradox? Because of their relative scarcity. (And thus marginal utility, but that's for later.)

Recall the supply and demand graph, with producer surplus (PS), consumer surplus (CS), and deadweight loss (DWL): competition maximizes total surplus.

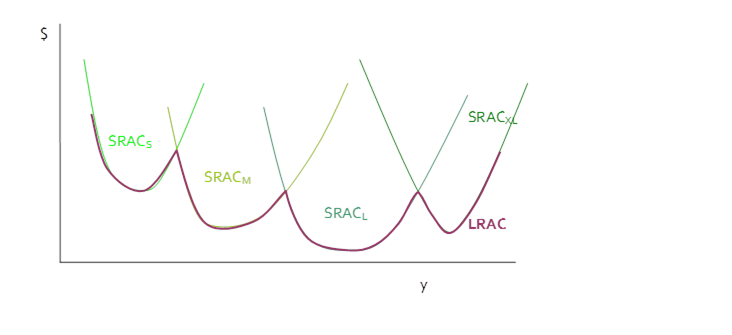

In production, supply prices in a perfectly competitive industry are determined from the minimum point of average total cost; this is the long-run industry supply curve. Firms compete to supply each commodity for the lowest price, meaning that they try to economize on inputs (use the fewest and cheapest possible).

Over a longer time period, firms will direct their Research & Development (R&D) budgets towards economizing on items which are most scarce (i.e. have high prices), again just because it's profitable for them to do so.

Markets are extraordinarily powerful. Recall that many countries experimented with central planning (called Communism) and that was a disaster. The best efforts by very smart people (motivated, at times, by fear for their lives) were not enough to supply even a fraction of the goods that could be provided by a market economy. Wise policy will use markets wherever possible. However markets are neither all-powerful nor omniscient. There will be cases where the simple assumptions underlying the Welfare Theorems are no longer valid, particularly where there are substantial amounts of goods with imperfect property rights (with externalities) and/or substantial transactions costs. Bob Solow, the Nobel-prize-winning economist, refers to the free-marketeers who see the doughnut while the interventionists see the hole (Solow 1974 AER).

Externalities

Externalities are cases of imperfect property rights. If my decision to consume some item has an impact on someone else, then who owns that spillover effect? This can be particularly acute in trying to resolve intertemporal or intergenerational allocations: what if my decisions affect people who will not even be born until the next century?

(Paul Krugman blogged about Pigou, the English economist who first theorized about externalities.)

Examples. Smoking carries an externality: my choice to inhale smoke means that people near me will also inhale smoke. That consumption choice imposes a negative externality. Other consumption choices might impose positive externalities: economists have found significant positive externalities from education, so your decision to get more education will tend to raise the wages that your family and people around you will get. Externalities can arise from production as well as consumption. A factory belching smoke imposes negative externalities on those down-wind. A flower farm might impose positive externalities (more commonly, a beehive kept by someone who wants honey will have positive externalities because the bees can pollinate other flowers of fruits or vegetables). There can be positive or negative externalities; these externalities can arise in production or consumption.

Hanley, Shogren, & White quote Ken Arrow, that an externality is "a situation in which a private economy lacks sufficient incentives to create a potential market in some good, and the nonexistence of this market results in a loss of efficiency."

Each word is essential: "lacks sufficient incentives" makes clear that it's not necessarily about technologies but organizations, "potential market" notes that even a possible market has effects (threat of entry or calls/puts), and the final phrase makes clear that not every market failure is insoluble and requires government action.

A lack of a positive externality can be considered a negative externality, and vice versa.

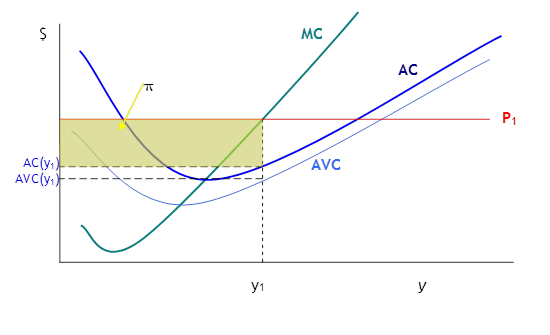

Negative externalities of production produce marginal external costs (MEC) above marginal private costs (MC, the supply curve). Since these MEC are external to the firms they do not enter into a private firm's calculations of profit maximization, so the private firm produces until P = MC. But this creates a deadweight loss, since at this level the marginal social cost (MSC = MC + MEC) is greater than the price, which measures the marginal benefit that people attach to this good. So the last units cost society more to produce than people value them, which is DWL. Graphically,

So in this case government intervention can reduce or eliminate DWL. A tax that is just equal to the MEC, or a regulation that limits industry output to Y*, would reduce the DWL to exactly zero. Consumers should pay more, P*, since that is the true cost. These taxes are called Pigou taxes after the economist who proposed them originally.
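A numerical sketch of the Pigou tax follows. The linear demand and cost curves and every number in them are assumptions chosen only to make the triangle easy to compute; Y* and P* in the text correspond to `q_star` and `p_star` here.

```python
# Hypothetical linear market; every number here is an assumption for illustration.
A, B = 100.0, 1.0   # marginal benefit (demand): P = A - B*Q
C, D = 20.0, 1.0    # marginal private cost (supply): MC = C + D*Q
MEC = 10.0          # constant marginal external cost per unit


def equilibrium(tax=0.0):
    """Quantity and consumer price where demand meets MC + tax."""
    q = (A - C - tax) / (B + D)
    return q, A - B * q


q_private, p_private = equilibrium()     # firms ignore the externality
q_star, p_star = equilibrium(tax=MEC)    # Pigou tax set equal to MEC

# DWL without the tax: the triangle between MSC and demand,
# running from the efficient quantity to the private quantity.
dwl_without_tax = 0.5 * MEC * (q_private - q_star)

print(f"private outcome:   Q={q_private}, P={p_private}")
print(f"with Pigou tax:    Q={q_star}, P={p_star}")
print(f"DWL removed by the tax: {dwl_without_tax}")
```

With the tax in place, the firm's own marginal cost plus the tax equals the marginal social cost, so the market quantity falls to the efficient level and consumers pay the higher price that reflects the true cost.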

Pollution is the classic example of marginal social costs over and above the marginal private costs. Decades ago, a firm generating waste might simply dump it into the nearest river. This raised costs for other firms downstream if they needed clean water. (Where by 'firms' I'm including government operations, for instance drinking-water treatment plants.)

Externalities loosen the case that individual maximization behavior will inevitably lead to social maximization. Consider the simple case of conversation at a party or bar: you want to talk with someone but there's so much noise that you have to speak loudly to be heard. As everyone in the bar makes this same choice, the general level of noise must rise and so everyone must, again, choose to speak even louder.

Generally externalities break down the argument that all government intervention must produce deadweight loss. Of course government actions are determined by politicians and so are often heavy-handed or even completely wrong, but this must be determined carefully and on the particular facts of each case. General statements, of the sort that politicians and newspaper editorials make, that all taxes are bad or all regulation is wrong: these statements are pure foolishness.

This is the basis for economists suggesting, for example, higher taxes on gasoline. Greg Mankiw, who advised President G W Bush, has a "Pigou Club" of economists lobbying for higher fuel taxes for just this reason (http://gregmankiw.blogspot.com/2006/10/pigou-club-manifesto.html). [Note: Mankiw is a clear communicator, which got him into trouble, since his views about the advisability of a gas tax, plus his views that 'outsourcing' is not really a problem, didn't mesh with that administration's overall message. I can disagree with him on many policy issues but still admire him for being intellectually honest in this case even when it was not in his best interest!]

A positive externality in production would put the marginal social cost curve to the right of (below) the marginal private cost curve, creating a different DWL triangle because there would now be insufficient production.

Sometimes government intervention in "strategic industries" or to subsidize R&D is justified by this argument. Any single firm might have relatively high costs but the total social cost is lower, so government intervention (subsidizing production) might be justified.

Research into some area, say the basic biological science behind pharmaceuticals, is expensive. There are important knowledge spillovers so a breakthrough in a particular area is likely to lower costs for the whole industry. If you've had a class in Urban Economics you know that many firms choose their location based on these sorts of knowledge spillovers. Government-sponsored research in the

Externalities in demand would shift the marginal social benefit curve to the left or to the right of the marginal private benefit (demand) curve. Positive externalities of demand are "bandwagon" effects or "network" effects: Facebook is popular because 'everybody' has a FB account, so MySpace died (and Google+ limps along). Negative externalities of demand are congestion effects: when the iPhone was introduced on AT&T's network, the huge demands for bandwidth slowed down everybody's phone. City traffic has this effect.

So in each case, a tax or price/quantity restriction can actually reduce the deadweight loss and make everybody better off.

Vertical Sum not Horizontal

Unlike the case of private demand where the market demand is the horizontal sum of the individual demands, the social marginal willingness to pay (SMWP) is the vertical sum of each individual's marginal willingness to pay (MWP). Because the nature of the externality means that the consumption is shared, we don't add up how many are demanded by each individual, at a given price. Rather we ask, if society were to consume one more unit (such consumption would be shared by many individuals), how much each individual would be willing to pay, and add up each individual's marginal willingness to pay.

These items can be positive or negative: I might be willing to pay something for public consumption of some good, or I might be willing to pay an amount to avoid the public consumption of that good.
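The difference between the two kinds of sum can be seen with two hypothetical individuals whose marginal willingness to pay is linear. The intercepts of 10 and 6 are assumptions for illustration only.

```python
# Two hypothetical individuals with linear marginal willingness to pay.
def mwp_a(q):
    return 10.0 - q


def mwp_b(q):
    return 6.0 - q


def social_mwp(q):
    """Vertical sum: at a shared quantity q, add up what each person would pay."""
    return mwp_a(q) + mwp_b(q)


def quantity_demanded(price, intercept):
    """Private good: invert MWP = intercept - q to get the quantity at a price."""
    return max(0.0, intercept - price)


def market_demand_private(price):
    """Horizontal sum: at a common price, add up the quantities each person buys."""
    return quantity_demanded(price, 10.0) + quantity_demanded(price, 6.0)


print(social_mwp(2.0))             # shared good: both consume the same 2 units
print(market_demand_private(4.0))  # private good: each buys a separate quantity
print(social_mwp(8.0))             # one person's MWP can be negative and offset the other's
```

For the shared good the quantity is common and the payments are added; for the private good the price is common and the quantities are added.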

Rival and/or Excludable Goods versus Pure Public Goods

A problem with providing public goods is that everybody tends to wait around for someone else to do the hard work. The idea is that, if the problem impacts somebody else, then that person might do the hard work and then I can just take the externalities, getting the benefits without any of the costs. For example the global campaign to restrict carbon emissions suffers from this free rider problem: every country wants the other countries to take all the pain.

We can generally distinguish goods as either excludable or non-excludable and either rival or non-rival (in any combination).

Excludable goods mean that the technology exists to keep other people from using my stuff: kids fight in order to make their toys excludable, and a mass of laws against theft and robbery help me keep my stuff excludable. Non-excludable is the opposite: I can't keep people from using it. Perhaps it's an architecturally lovely building that every passer-by can enjoy. Or the neighbor without curtains. Intellectual property law exists to try to make certain goods excludable.

Rival means that someone else's consumption of the good interferes with my own. If someone else eats my cookie then I can't eat it: cookies are rival. Non-rival is the opposite. Sometimes these distinctions are a bit arbitrary: parents don't understand why kids can't share toys ("If you're not playing with it now, why is it a problem if the other child plays with it now?"), just as many people would consider their jewelry rival even though the same argument could apply. (Almost nobody, really, rents jewelry for a night out. The bling is only valuable if it's yours.)

Economists label goods that are non-rival and non-excludable "pure public goods." These are often goods that are provided by governments. Police and fire protection are difficult to exclude (both because of externalities) and, given the infrequency of occurrences, are basically non-rival. There are private security guards but these are not as common as police. National defense is non-excludable and non-rival.

But other goods, which the

While these goods are not provided publicly, their peculiar character means that pricing must take different forms. Radio stations play advertisements if they broadcast for anyone; satellite radio makes their product excludable by encoding the broadcasts and selling the decoders. You can think of many more examples.

But in general, whereas we were able to prove the First and Second Welfare Theorems, in the case of no externalities and perfect property rights, to show that private markets produce Pareto-optimal outcomes, this is no longer the case when there are externalities or imperfect property rights. Markets are best wherever possible but they are not always possible.

This does not mean that every externality demands government intervention! Markets are dynamic and give participants incentives to figure out ways to exclude rivals, as the examples above clearly show. TV stations originally broadcast over the airwaves to everyone; now cable and satellite broadcasts require de-coders. Music companies are slowly trying to figure out how to exclude copying of their products (or figure out other ways of getting revenue: right now ringtones are supporting the labels!). Internet radio services like Pandora or last.fm complicate matters; Apple's iTunes store crunches the music companies' margins but offers greater security.

There are also cases where private citizens will join together and voluntarily restrict their own choices. Buying a coop or condo means that you agree to be bound by the decisions of a managing board, exactly in order to keep others from imposing externalities on you. If one person doesn't maintain his unit then the board has a legal basis to force the owner to make improvements. Business Improvement Districts (BIDs) have some of this character.

Free Rider Problem

People have an incentive to 'free ride' on other people's willingness to pay. Each would want the other consumer to pay more. I might claim that, actually, my preferences are not like my neighbor's; my neighbor cares greatly about the quality of the public good while I hardly care at all so my neighbor should pay most of the cost. My neighbor, of course, will likely make the same claim.

Consider common debates about public taxation levels. Some people want the government to levy higher taxes and provide more services; others want lower taxes and fewer services. (In defiance of the facts, the former group would more commonly be associated with Democrats and the latter with Republicans.) Sometimes lower-tax supporters will assert, "Well, if you want higher taxes, why don't you start by volunteering to pay more tax yourself?" The public good argument and marginal-willingness-to-pay argument shows why that argument is fallacious.

This problem, of consumers having an incentive to "fake" their marginal willingness to pay for an item, does not occur in the case of private goods, because for ordinary goods, if I don't pay the price I don't get to consume the item. If I go to the coffee shop and offer just 20 cents for a cup of coffee, they won't give it to me. But with a public good, I have an incentive to try to get my neighbor to pay for the public good so that I can consume it for free.
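The incentive to free ride can be laid out as a tiny two-person game. The benefit of 6 per neighbor and the total cost of 10 are hypothetical numbers chosen so that the good is worth providing jointly but not worth paying for alone; the equal split of the cost among contributors is also an assumption.

```python
BENEFIT = 6.0  # hypothetical value each neighbor places on the public good
COST = 10.0    # hypothetical total cost of providing it


def payoff(i_contribute, neighbor_contributes):
    """My net gain; the good is provided if anyone pays, and payers split the cost."""
    contributors = int(i_contribute) + int(neighbor_contributes)
    if contributors == 0:
        return 0.0  # nobody pays, so the good is not provided
    my_share = COST / contributors if i_contribute else 0.0
    return BENEFIT - my_share


for me in (True, False):
    for neighbor in (True, False):
        print(f"I pay={me!s:5} neighbor pays={neighbor!s:5} -> my payoff {payoff(me, neighbor):+.1f}")
```

Whatever my neighbor does, I do better by not paying, and my neighbor reasons the same way; so the good is not provided even though both of us would gain if we shared the cost.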


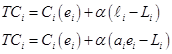

Advanced: The Consumer's

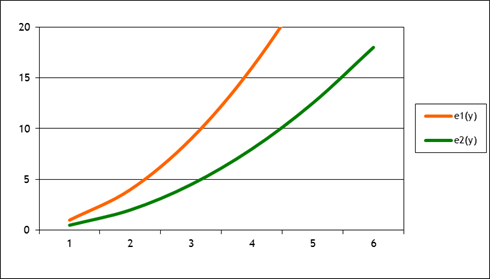

Problem for the case of Externalities Economics investigates many cases of externalities; some of these relate directly to the environment. My decision to purchase organic food might help the people who live near the farmer's fields (which no longer are sprayed with dangerous chemicals). Or externalities could relate to networks or other non-environmental issues. But for now consider two consumers choosing between two goods, x and y, where y is a pure public good (define) that would only be provided by some external organization (like a government). How much of the public good should be provided? Or, equivalently, how much would the two people be willing to spend? This decision can be enormously complicated if we worry too much about income effects and complementarities among goods. If the free public goods are mp3 files of top music, provided by the internet, then my marginal utility for these goods might depend quite heavily on my possession of an iPod or computer. More seriously, there has been a lengthy debate on the degree to which people demand environmental services as they get wealthier. But for now we start simply and work our way up. For any ordinary good we can graph a consumer's demand curve: the marginal benefit gained by consuming one more unit of the good. In general this demand curve will slope downward due to diminishing marginal utility.

For a public good we can ask the same question: what is the consumer's marginal willingness to pay for one more unit of this public good? Again, this will generally slope downward.

This can again be caricatured as the demand curve for the public good, although it differs significantly from a typical demand curve; crucially, payment for public goods can be difficult to arrange.

One utility function that is easy to work with is the quasi-linear utility, where x is typically interpreted as a composite good (a basket of ordinary consumption items) with price normalized to one and y is the public good. The marginal condition, that [...], determines the willingness to pay for the public good.

Note that this is not the total value attached to the public good, just the willingness to pay for one additional unit; that's why it's called Marginal. This is just the same as the case with ordinary private goods: the fact that I willingly pay $1 for another cup of coffee does NOT imply that I would give up all of my coffee intake for $1, only that my caffeine consumption is already high enough that I would only pay $1 for yet another cup.

Now suppose there were two people who could consume this public good. How much would these two people be willing to pay for this public good?
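As a rough numerical illustration of marginal willingness to pay, the sketch below assumes a specific quasi-linear form, U = x + sqrt(y). That functional form is an assumption made only for this example, and the function names are hypothetical. Because both people consume the same units of a public good, their combined willingness to pay for one more unit is the sum of the individual amounts.

```python
import math

def mwtp(y):
    """Marginal willingness to pay for one more unit of the public good.

    Assumed utility: U = x + sqrt(y), with the price of x normalized to 1,
    so MWTP = dU/dy = 1 / (2 * sqrt(y)).
    """
    return 1.0 / (2.0 * math.sqrt(y))

def combined_mwtp(y, n_people=2):
    """Sum of individual MWTP: everyone consumes the same unit of y."""
    return n_people * mwtp(y)

for y in (0.25, 0.5, 1.0, 2.0):
    print(f"y = {y:4.2f}  individual MWTP = {mwtp(y):.3f}  "
          f"combined MWTP (2 people) = {combined_mwtp(y):.3f}")
```

Under this assumed form the individual schedule slopes downward, and at y = 1 each person would pay 0.5 for one more unit, so the two together would pay 1.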

This basic principle applies whether the public goods have

positive or negative externalities.

Basically, the lack of a bad thing can be considered a good thing, for

example if trash piling up is a bad then we can redefine and set trash

collection as a good (last year this was a pressing concern in Of course this assumes that there is some way to get people to reveal how much they'd be willing to pay for these public goods. This can be difficult Person 1 would willingly pay 0.5 in order to get 1 unit of the public good, y which assumes that the other person is also paying 0.5. If there is not a full unit of the public good provided then Person 1 would not be optimizing. Person 2 will get utility from the public good provided by Person 1, even if Person 2 contributes nothing. Consider now the case of two consumers with slightly

different preferences: now person 2 has quasi-linear utility of the form How could these two people find this out? They have no incentive to tell the truth because they have no way of finding out the other person's true utility function. What levels would be chosen, if the people were choosing

individually? For simplicity we'll

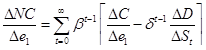

return to the case of two identical individuals with Notate the amount of the public good bought by an individual y; the amount of the public good that others have already bought is Y (capital letter). Each unit purchased costs price p. So an individual consumes an amount (Y + y) of the public good and (x - py) of the private good (since after paying for y units of the public good she has only that much income left over for spending on x). With the given utility function this is But how much would be produced, if the people could get

together and agree on an optimal social amount (somehow read each others'

minds to find out how much they'd be willing to pay)? Now people would maximize their utility, Compare this amount with the private solution amount to

see that So while there will generally be some private provision of the social good, this will generally be much smaller than the amount that would be socially optimal. And the size of this divergence will grow bigger when there are more people sharing the externality. You should be able to do this same analysis with a

different utility function, such as Cobb Douglas. For this, In our society probably the most common method of determining optimal social policies is voting, which will not in general produce optimal results but might be satisfactory. Recall the Arrow Impossibility Theorem which stated that democracy is not rational; also Churchill's "democracy is the worst form of Government except all those other forms that have been tried from time to time." If people's preferences have some homogeneity (they're not too diverse) then voting can even be optimal. Society has created a wide array of institutions that counteract the problems that arise from externalities. At one point these were largely based on sociological mores and traditions. Now many are contractual; in some cases governments have stepped in to formalize particular legal constructions from the modern corporation to housing co0ps, condominium associations, business improvement districts, and so on. The formal analysis mirrors the Nash game of oligopoly: although each participant would like to buy more Y (or charge a higher price), they do not do this because they assume that others would not be so 'public spirited' as to also buy more Y (or charge a high price) so they compete. It is like a Prisoner's Dilemma. Return to the case of two identical

individuals with We could simplify this as a Prisoner's Dilemma:

But we need to fill in the Utility values in each

bin. We assume that each person has a

budget of 1; the amount of good x that is chosen is simply the remaining

budget. Setting Y=1 implies that each

chooses y=1/2 so x=1/2 and So this gets us this Prisoner's Dilemma table:

So "Compete" is a dominant strategy. As typical with this analysis, it could be extended to multiple interactions, complete with reputational games, random strategies, etc. |
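The comparison of private and social provision, and the Prisoner's Dilemma payoffs, can be sketched numerically. The code below assumes the utility U = x + sqrt(Y + y) with price p = 1 and a budget of 1 per person. The functional form is an assumption made for illustration only; it was picked because it reproduces the figures in the text (a social optimum of Y = 1 with each person paying 0.5). The strategy labels and function names are likewise hypothetical.

```python
import math

PRICE = 1.0   # assumed price per unit of the public good
BUDGET = 1.0  # each person's budget, as in the text

def utility(own_y, other_y):
    """Assumed utility U = x + sqrt(Y + y), with x the leftover budget."""
    x = BUDGET - PRICE * own_y
    return x + math.sqrt(own_y + other_y)

def best_response(other_y):
    """Privately optimal purchase given what the other person buys.

    First-order condition: 1 / (2 * sqrt(Y + y)) = p, so Y + y = 1 / (4 p^2).
    """
    target_total = 1.0 / (4.0 * PRICE ** 2)
    return max(0.0, target_total - other_y)

# Symmetric private (Nash) outcome: the two split the privately chosen total.
private_total = 1.0 / (4.0 * PRICE ** 2)
compete_y = private_total / 2.0

# Social optimum: the SUM of the two marginal benefits equals the price,
# 2 / (2 * sqrt(Y)) = p, so Y = 1 / p^2, split equally.
social_total = 1.0 / PRICE ** 2
cooperate_y = social_total / 2.0

choices = {"Cooperate": cooperate_y, "Compete": compete_y}
# payoffs[(mine, theirs)] is my utility given both strategies.
payoffs = {
    (mine, theirs): utility(choices[mine], choices[theirs])
    for mine in choices
    for theirs in choices
}

print(f"private total Y = {private_total}, social total Y = {social_total}")
for (mine, theirs), value in payoffs.items():
    print(f"I {mine:9s} / other {theirs:9s}: my utility = {value:.4f}")
```

Under these assumptions mutual cooperation gives each person 1.5 and mutual competition gives 1.375, yet competing pays more whichever choice the other person makes (about 1.67 against a cooperator, versus 1.5; and 1.375 against a competitor, versus about 1.29). That ordering is what makes "Compete" dominant.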

Coase Theorem

The Coase Theorem specifies why we link transactions costs with imperfect property rights: in the absence of transactions costs, many imperfections in property rights (many externalities) will be properly priced and so may be produced at Pareto optimal levels.

Consider the case of two neighbors sharing a building. One is a bar, which, in the course of ordinary business, produces loud music and loud people. The other is a laboratory which operates best without noise or vibrations; as these levels increase the lab must spend more money to shelter its experiments. Starting from zero noise, the bar gets a significant marginal benefit (MB) from the first few decibels of noise; however, the marginal benefit falls as the level of noise rises. The lab can, at low cost, abate low levels of noise but its costs rise as it tries to abate more and more noise. Costs avoided are net benefits, so we can consider this as a marginal benefit to the lack of noise: when noise is low, reducing it has a small marginal benefit, but as the noise rises the marginal benefit rises. So we can draw their respective marginal benefits (MBL to the lab and MBB to the bar) at different levels of noise (N):

Suppose that the level of noise were initially to be at some high level, N1. Then the lab must be spending a large amount of money to abate the noise, MBL(N1), while the bar gets a much lower marginal benefit from the noise, MBB(N1).

If, instead, there were a low level of noise, N2, then the lab could abate it at low cost, MBL(N2), while the bar would place a high marginal value (MBB(N2), a high marginal profit) on making more noise.

If there are clear property rights then the participants can trade. It may not matter if the law establishes that businesses have a right to silence or if the law establishes that businesses can make as much noise as they want; in either case the parties can then trade. If there is no clear law, either because there are no clear precedents or enforcement is capricious, then the two sides have an incentive to fight.

But suppose, for example, zoning laws mandate silence so that the lab has "ownership" of the lack of noise. In this case the lab can supply certain levels of noise by buying noise-reduction, so MBL is a supply curve of noise. The bar would like to buy up the right to make a certain amount of noise, so MBB is a demand curve. If we begin from cacophony, where the initial level of noise is at a high level such as N1, then the lab would clearly want to lower the noise level: the last increment of noise could be sold at only a low price, MBB(N1), but it costs the lab much more, MBL(N1), to abate that noise. It will enforce a lower noise level, but not necessarily complete silence.

If, instead, the noise level were at a whisper, at an amount like N2, then the bar would be willing to pay a large amount, MBB(N2), to be noisier, while the lab could abate that noise at a small cost, MBL(N2), so it would be profitable for the lab to sell the right to make noise, buy the abatement technology, and make a profit from the difference. This will continue until the noise level reaches an equilibrium level, N*, where the marginal benefits to each side are balanced.

![]()

If, on the other hand, there were no restrictions on noise emissions, then the bar would have the right to emit as much noise as it chose. We can think of the bar as now supplying silence (the absence of noise, measured backwards on the horizontal axis) and the lab demanding silence. Since we're flipping the horizontal axis this gives a downward sloping demand (the MBL) and upward sloping supply (MBB).

![]()

If the amount of noise were at cacophony N1, then there would again be an incentive for trading: the bar could make a profit since it could reduce noise at only a small cost while the lab would be willing to pay a large amount for that reduced noise. If the noise were at a whisper, N2, then the bar would find it profitable to emit more noise, and the lab could not "outbid" it since the bar would demand a high price of MBB(N2) while the lab would only be willing to pay MBL(N2).

The big insight is that no matter whether the lab has a right to silence or if the bar has a right to noise, the final amount of noise is unchanged at N*. The initial allocation of property does not change the outcome. All that changes is the direction of money payments: if the lab has a right to silence then the bar will pay it for the amount N*; if the bar has a right to make noise then the lab will pay it. The direction of the flow of money changes but not the amount of noise chosen. This was the insight of Coase. He did not believe that zero transactions costs were universal or even common, but his insight clarifies how the problems of externalities might be solved by private transactions.
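The invariance result can be checked with a toy negotiation. The sketch below assumes linear marginal benefit schedules, MBB(N) = 12 - N and MBL(N) = 2N. These numbers are invented for illustration and do not come from the figures. The hypothetical `negotiate` function trades one tenth of a unit of noise at a time for as long as one side values the change more than it costs the other.

```python
def mb_bar(noise):
    """Assumed MBB: the bar's marginal benefit falls as noise rises."""
    return 12.0 - noise

def mb_lab(noise):
    """Assumed MBL: the lab's marginal abatement cost rises with noise."""
    return 2.0 * noise

def negotiate(start_tenths, max_rounds=10_000):
    """Trade noise in steps of 0.1 while gains from trade remain."""
    k = start_tenths  # noise level measured in tenths of a unit
    for _ in range(max_rounds):
        noise = k / 10
        if mb_bar(noise) > mb_lab(noise):
            k += 1  # bar values extra noise above the lab's cost of abating it
        elif mb_bar(noise) < mb_lab(noise):
            k -= 1  # lab values extra quiet above the bar's benefit from noise
        else:
            break   # marginal benefits balanced: no further gains from trade
    return k / 10

# Lab holds the right to silence: start at zero noise, bar pays for more.
lab_owns_silence = negotiate(0)
# Bar holds the right to noise: start where MBB = 0, lab pays for less.
bar_owns_noise = negotiate(120)

print("N* when the lab owns silence:", lab_owns_silence)
print("N* when the bar owns noise:  ", bar_owns_noise)
```

Both starting points end at the same noise level; only the side making the payments differs. The sketch ignores transactions costs and income effects, which is exactly the setting in which the result holds.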

Note that this result depends on the absence of "income effects" which, while reasonable in the case of firms (without financing constraints), might not be as reasonable for consumers. If poor people must buy a lack of pollution then they might not have enough income.

This also assumes that both sides to the transaction have continuous and monotonic marginal benefit schedules. If either MB curve were not continuous, i.e. with jumps, then the price might not be fully determined but the two sides should be able to bargain. If either MB curve were not monotonic then there could be multiple equilibrium points, such as this:

![]()

So there can be many complications but the central insight is that we should concentrate on transactions costs.

From the Coase viewpoint, transactions costs are equivalent to unclear (or insecure) property rights. What would happen if, in the above example of the lab and the bar, the noise were made by cars going by (ones tricked out with the bass speakers thumping, or Harley motorcycles with their distinctive roar)? The lab would have a difficult time either enforcing silence (if it had that right) or paying the passing vehicles (if they had a right to make noise). Similarly, if there is one noisy bar annoying large numbers of adjacent apartment-dwellers then it would again be difficult either for the neighbors to get together to pay the bar to lower the noise (if the bar had the right to make noise) or for the bar to compensate each of them (if the neighbors had a right to quiet).

In air pollution discussions, this is the difference between "point sources" and "non-point sources" since point sources of pollution (like large power plants) are easily identified while non-point sources (like every car) are much more difficult to effectively regulate.

With unclear property rights, if the noise level just happened to be at N1 but there could not be trading, then there would be deadweight losses equivalent to the shaded triangle DWL1; if the noise just happened to be at N2 then without trade the deadweight losses would be the other shaded triangle, DWL2.
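The size of these deadweight-loss triangles can be computed once the marginal benefit schedules are specified. The sketch below assumes linear schedules MBB(N) = 12 - N and MBL(N) = 2N, so N* = 4, and picks N1 = 8 and N2 = 1. All of these numbers are invented for illustration, and with non-linear curves the area would need to be integrated rather than taken as a triangle.

```python
def mb_bar(noise):
    """Assumed MBB: the bar's marginal benefit from noise."""
    return 12.0 - noise

def mb_lab(noise):
    """Assumed MBL: the lab's marginal cost of abating noise."""
    return 2.0 * noise

N_STAR = 4.0  # where 12 - N = 2N under the assumed schedules

def deadweight_loss(noise):
    """Triangle between the two MB lines, from N* out to `noise`."""
    base = abs(noise - N_STAR)
    height = abs(mb_lab(noise) - mb_bar(noise))
    return 0.5 * base * height

n1, n2 = 8.0, 1.0  # hypothetical cacophony and whisper levels
print("DWL1 at N1 =", n1, "is", deadweight_loss(n1))
print("DWL2 at N2 =", n2, "is", deadweight_loss(n2))
```

The loss is zero at N* and grows with the square of the distance from it, since both the base and the height of the triangle scale with that distance.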

![]()